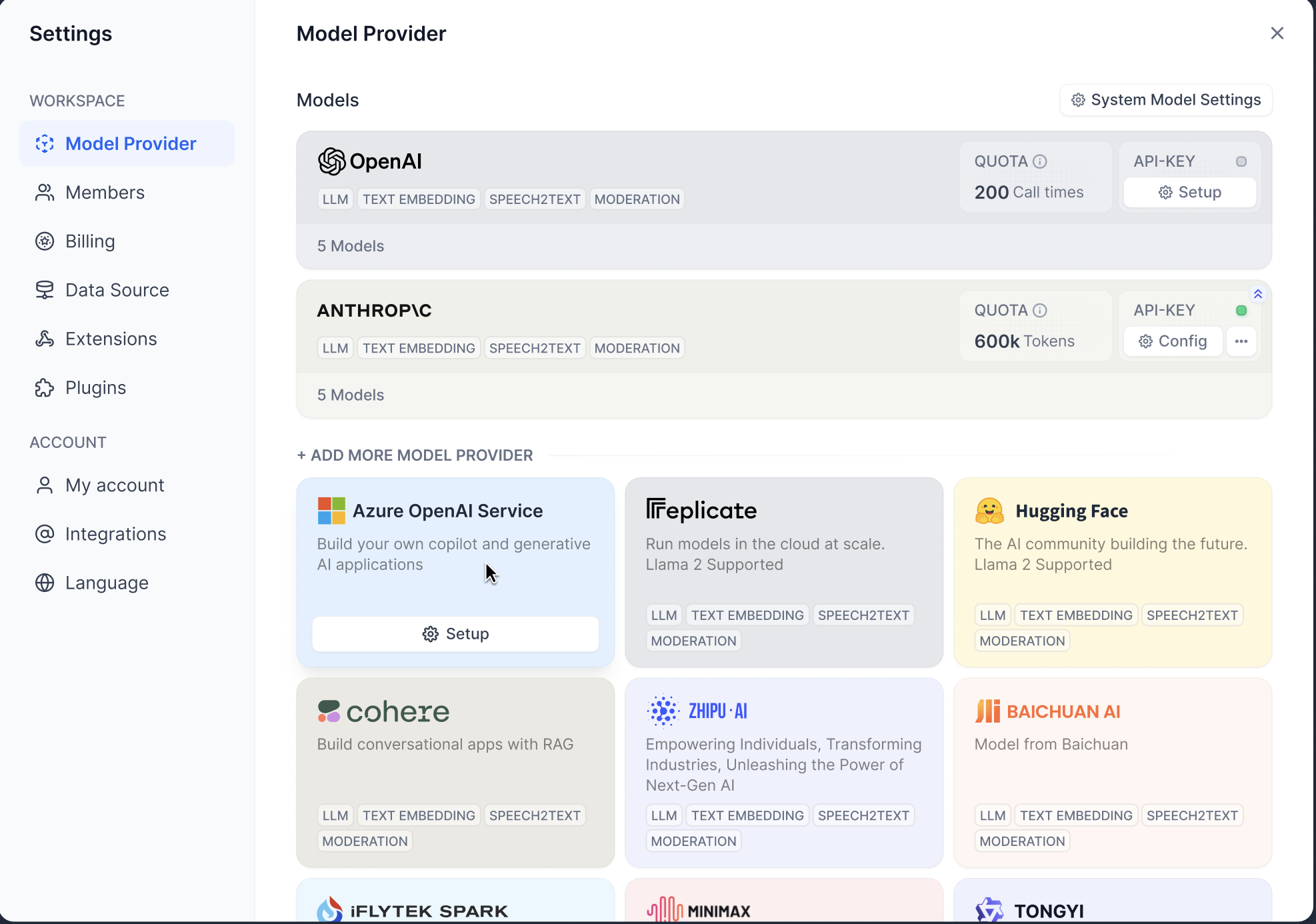

Dify supports major model providers, such as OpenAI’s GPT series and Anthropic’s Claude series. Each model has different capabilities and parameters, so choose a model provider that suits your application’s needs. Obtain an API key from the provider’s official website before configuring that provider in Dify.

Model Types in Dify

Dify classifies models into four types, each serving a different purpose:

- System Inference Models: Used in applications for tasks such as chat, name generation, and suggesting follow-up questions. Providers include OpenAI, Azure OpenAI Service, Anthropic, Hugging Face Hub, Replicate, Xinference, OpenLLM, iFLYTEK SPARK, WENXINYIYAN, TONGYI, Minimax, ZHIPU (ChatGLM), Ollama, LocalAI, and GPUStack.

- Embedding Models: Used to embed segmented documents in a knowledge base and to process user queries in applications. Providers include OpenAI, ZHIPU (ChatGLM), and Jina AI (Jina Embeddings).

- Rerank Models: Improve search results by reordering retrieved candidates according to their relevance to the user’s query. Providers include Cohere and Jina AI (Jina Reranker).

- Speech-to-Text Models: Convert spoken words to text in conversational applications. Provider: OpenAI.
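Embedding and rerank models are commonly chained in retrieval: the embedding model turns the query and the document chunks into vectors, the closest chunks are retrieved by vector similarity, and a rerank model then reorders that short list by relevance. The sketch below illustrates this pattern under stated assumptions; it is not Dify's implementation. The toy 3-dimensional vectors, the chunk texts, and the function names `retrieve`, `rerank`, and `overlap_score` are hypothetical, and `overlap_score` is a simple keyword-overlap stand-in for a real rerank model such as Cohere or Jina Reranker.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vector: list[float], chunks: list[dict], top_k: int) -> list[dict]:
    """Embedding step: keep the top_k chunks most similar to the query vector."""
    ranked = sorted(
        chunks,
        key=lambda c: cosine_similarity(query_vector, c["vector"]),
        reverse=True,
    )
    return ranked[:top_k]


def overlap_score(query: str, text: str) -> float:
    """Hypothetical stand-in for a rerank model: share of query words found in text."""
    query_words = set(query.lower().split())
    return len(query_words & set(text.lower().split())) / len(query_words)


def rerank(query: str, candidates: list[dict], score_fn=overlap_score) -> list[dict]:
    """Rerank step: reorder retrieved candidates by a query-text relevance score."""
    return sorted(candidates, key=lambda c: score_fn(query, c["text"]), reverse=True)


# Toy vectors for illustration; real embedding models return far longer vectors.
chunks = [
    {"text": "Reset your API key in Settings", "vector": [0.9, 0.1, 0.0]},
    {"text": "Dify supports Ollama for local models", "vector": [0.1, 0.9, 0.1]},
    {"text": "API key encryption uses RSA", "vector": [0.8, 0.2, 0.1]},
]
query = "how is my api key encryption done"
query_vector = [1.0, 0.0, 0.0]  # pretend this is the embedding of `query`

top = retrieve(query_vector, chunks, top_k=2)
reranked = rerank(query, top)
print([c["text"] for c in reranked])
```

Here vector similarity ranks "Reset your API key in Settings" first, while the reranking step moves "API key encryption uses RSA" to the top because it matches more of the query. This is the kind of correction a rerank model is meant to provide after a fast, approximate embedding search.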

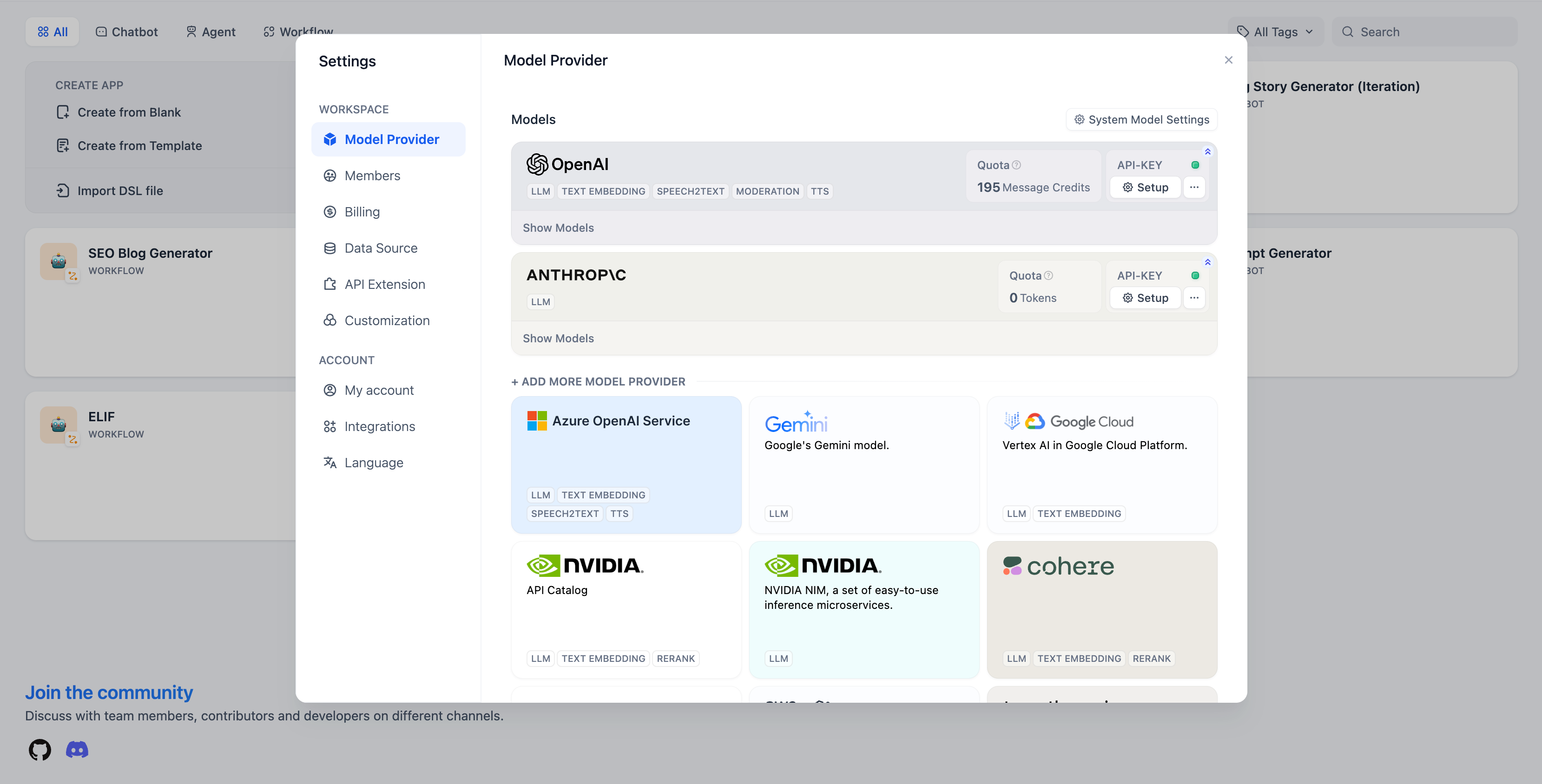

Hosted Model Trial Service

Dify offers trial quotas so that cloud service users can experiment with different models. Set up your own model provider before the trial ends to ensure uninterrupted application use.

- OpenAI Hosted Model Trial: Includes 200 invocations for models such as GPT-3.5-turbo, GPT-3.5-turbo-16k, and text-davinci-003.

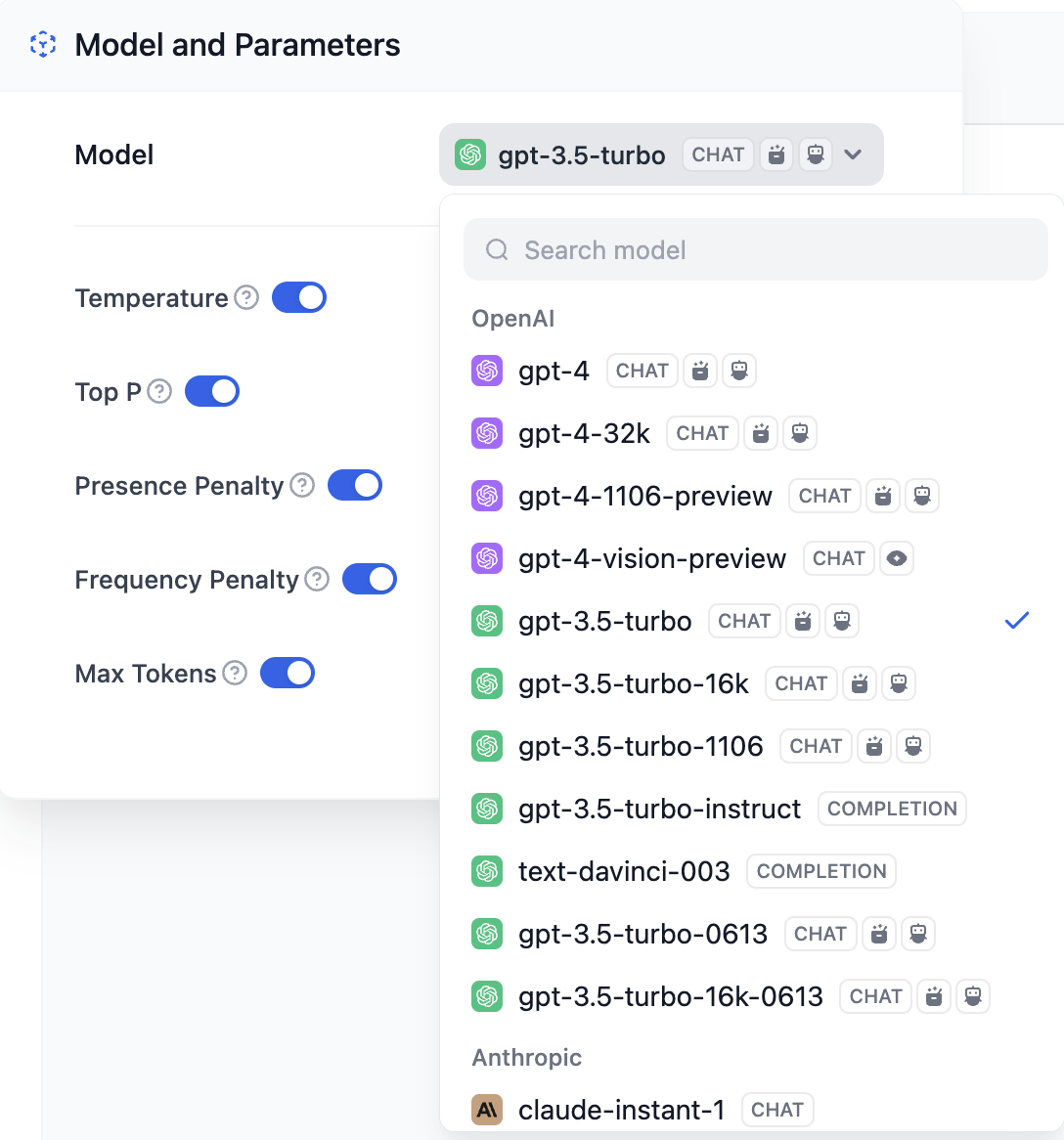

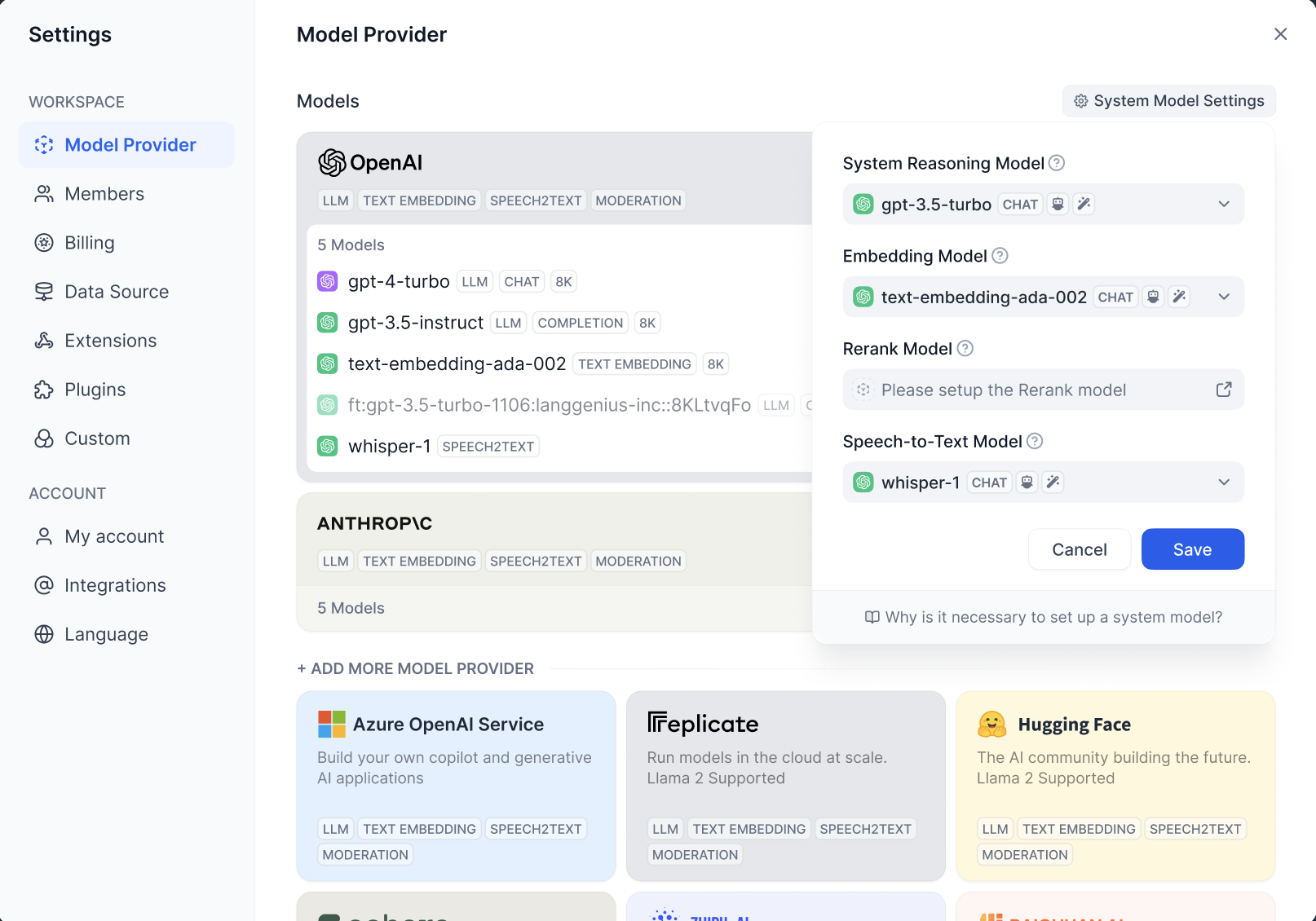

Setting the Default Model

Dify automatically selects the default model based on usage. Configure this in Settings > Model Provider.

Model Integration Settings

Choose your model in Dify’s Settings > Model Provider.

Model providers fall into two categories:

- Proprietary Models: Developed by providers such as OpenAI and Anthropic.

- Hosted Models: Platforms that host third-party models, such as Hugging Face and Replicate.

Dify uses PKCS1_OAEP encryption (RSA encryption with OAEP padding, as specified in PKCS #1) to protect your API keys. Each user (tenant) has a unique key pair for encryption, ensuring your API keys remain confidential.
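PKCS1_OAEP refers to RSA encryption with OAEP padding as defined in PKCS #1 (RFC 8017). The name matches the `Crypto.Cipher.PKCS1_OAEP` module in PyCryptodome. The sketch below is an educational, standard-library-only illustration of RSA-OAEP with one key pair per tenant. It is not Dify's code. The SHA-256 hash, the 2048-bit key size, the in-memory `tenant_keys` dictionary, and all function names are assumptions chosen for illustration. The code is not constant-time and should not be used in production, where a vetted cryptography library is the right choice.

```python
import hashlib
import secrets

H_LEN = hashlib.sha256().digest_size  # 32 bytes; SHA-256 is an assumed hash choice
L_HASH = hashlib.sha256(b"").digest()  # hash of the empty OAEP label


def _is_probable_prime(n: int, rounds: int = 40) -> bool:
    """Miller-Rabin probabilistic primality test."""
    if n < 2:
        return False
    for small in (2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37):
        if n % small == 0:
            return n == small
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(rounds):
        a = secrets.randbelow(n - 3) + 2  # random base in [2, n - 2]
        x = pow(a, d, n)
        if x in (1, n - 1):
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False
    return True


def _random_prime(bits: int) -> int:
    while True:
        # Set the two top bits (so p * q has exactly 2 * bits bits) and force odd.
        candidate = secrets.randbits(bits) | (3 << (bits - 2)) | 1
        if _is_probable_prime(candidate):
            return candidate


def generate_keypair(bits: int = 2048) -> tuple[tuple[int, int], tuple[int, int]]:
    """Return ((n, e), (n, d)): a hypothetical per-tenant RSA key pair."""
    e = 65537
    while True:
        p, q = _random_prime(bits // 2), _random_prime(bits // 2)
        phi = (p - 1) * (q - 1)
        if p != q and phi % e != 0:  # e is prime, so this means gcd(e, phi) == 1
            n = p * q
            return (n, e), (n, pow(e, -1, phi))


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def _mgf1(seed: bytes, length: int) -> bytes:
    """MGF1 mask generation function (RFC 8017, Appendix B.2.1) using SHA-256."""
    out, counter = b"", 0
    while len(out) < length:
        out += hashlib.sha256(seed + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def oaep_encrypt(public_key: tuple[int, int], message: bytes) -> bytes:
    n, e = public_key
    k = (n.bit_length() + 7) // 8
    if len(message) > k - 2 * H_LEN - 2:
        raise ValueError("message too long for this key size")
    padding = b"\x00" * (k - len(message) - 2 * H_LEN - 2)
    db = L_HASH + padding + b"\x01" + message
    seed = secrets.token_bytes(H_LEN)  # fresh randomness: same input, new ciphertext
    masked_db = _xor(db, _mgf1(seed, k - H_LEN - 1))
    masked_seed = _xor(seed, _mgf1(masked_db, H_LEN))
    encoded = b"\x00" + masked_seed + masked_db
    return pow(int.from_bytes(encoded, "big"), e, n).to_bytes(k, "big")


def oaep_decrypt(private_key: tuple[int, int], ciphertext: bytes) -> bytes:
    n, d = private_key
    k = (n.bit_length() + 7) // 8
    encoded = pow(int.from_bytes(ciphertext, "big"), d, n).to_bytes(k, "big")
    masked_seed, masked_db = encoded[1:1 + H_LEN], encoded[1 + H_LEN:]
    seed = _xor(masked_seed, _mgf1(masked_db, H_LEN))
    db = _xor(masked_db, _mgf1(seed, k - H_LEN - 1))
    rest = db[H_LEN:].lstrip(b"\x00")  # drop the zero padding string
    if encoded[0] != 0 or db[:H_LEN] != L_HASH or not rest.startswith(b"\x01"):
        raise ValueError("decryption error")
    return rest[1:]


# Hypothetical per-tenant key store: each tenant gets its own key pair.
tenant_keys = {tenant: generate_keypair() for tenant in ("tenant-a", "tenant-b")}

public_a, private_a = tenant_keys["tenant-a"]
stored = oaep_encrypt(public_a, b"sk-example-api-key")  # placeholder, not a real key
recovered = oaep_decrypt(private_a, stored)
```

Because each tenant has its own key pair, a value encrypted with tenant A's public key can only be decrypted with tenant A's private key. Decrypting it with tenant B's private key fails the padding checks and raises `ValueError`.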

Using Models

Once configured, these models are ready for application use.